So, you wanna know about these crowd counters, huh? I’ve been messing around with them for a while now, tried to get them to actually work for what I needed, and let me tell you, it’s not as simple as those glossy brochures make it out to be. I started this whole journey thinking it would be a straightforward way to manage foot traffic in a couple of venues I was involved with. Sounded great on paper: get a device, stick it up, and boom, instant data on how many folks are coming and going. Easy peasy, right?

My first dive into this was for a small event space. The idea was to track peak times, figure out staffing needs, and generally get a handle on capacity. I picked up a few different types of counters, some basic IR beam ones, others that used camera tech. First up, the IR beam counters. These seemed simple enough: break the beam, and it counts. But man, the headaches started almost immediately. You put them near an entrance, and people just don’t walk through one by one. Two people side by side? Counts as one. Someone goes in, then backs out real quick? Still counts as an entry. Kids running around? Forget about it. The numbers were wildly off, which made the whole exercise pretty much useless for any real capacity management. We tried mounting them at different heights and using narrower doorways, but it was a constant struggle to get anything remotely accurate. This was my first smack in the face: basic accuracy is a huge issue with the simpler stuff.
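To see why two people walking side by side turn into one count, here’s a minimal Python sketch of a single-beam counter. It assumes the counter registers one count per continuous interruption of the beam and knows nothing about direction. The `Passage` type, the `beam_breaks` function, the `min_gap` parameter and the timings are all hypothetical, made up for illustration; they don’t come from any real device.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    """One person crossing the beam: the time span (seconds) they block it."""
    start: float
    end: float


def beam_breaks(passages: list[Passage], min_gap: float = 0.0) -> int:
    """Count distinct interruptions a single, direction-blind IR beam would see.

    Hypothetical model: blockages that overlap (or are separated by no more
    than min_gap seconds) merge into one continuous interruption, so two
    people shoulder to shoulder register as a single count.
    """
    if not passages:
        return 0
    intervals = sorted((p.start, p.end) for p in passages)
    breaks = 1
    current_end = intervals[0][1]
    for start, end in intervals[1:]:
        if start > current_end + min_gap:
            breaks += 1              # beam was clear long enough: new count
            current_end = end
        else:
            current_end = max(current_end, end)  # still blocked: merge
    return breaks


# Hypothetical doorway traffic: a pair side by side, then one person alone.
traffic = [
    Passage(0.0, 0.6),  # person A
    Passage(0.1, 0.7),  # person B, shoulder to shoulder with A
    Passage(3.0, 3.5),  # person C, alone
]
print(beam_breaks(traffic))  # 2 counted for 3 people
```

Because the sketch ignores direction entirely, it also can’t tell a real entry from someone who steps in and backs straight out, which is the other failure mode mentioned above.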

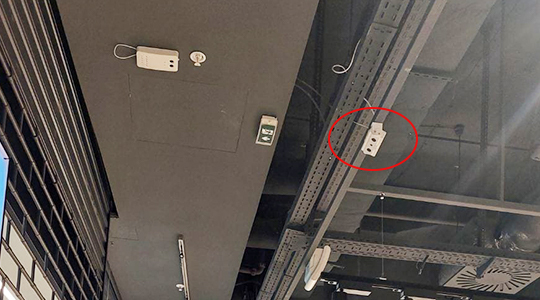

Then I moved on to the more advanced camera-based systems. These promised a lot: heatmaps, flow direction, and more precise counting, even in dense crowds. I went with one system that even bragged about its AI capabilities. Installation was a beast. We had to find the right angles, get the lighting just so, and make sure there were no major obstructions. After hours of tweaking, we finally got it running. For a bit, it seemed promising. The real problems, though, started creeping in when the environment wasn’t perfectly controlled. A slight change in lighting – say, sunlight streaming in at a different angle in the afternoon – would throw the counts completely out of whack. Shadows, reflections on polished floors, even someone holding up a shiny phone could mess with it. It would either double-count or just miss people entirely. I remember one busy afternoon when the system reported almost no one in a particular area, while there were clearly dozens of people there. It was just blinded by a patch of glare. So yeah, environmental sensitivity, that’s a big one. Even the systems from FOORIR, which I really thought would handle it better, struggled with these dynamic light changes.

Another major headache was dealing with privacy. Once you start talking about cameras, even if it’s just for counting, people get rightly nervous. We had to put up signs everywhere explaining it wasn’t recording faces or anything, just tracking blobs of movement. Still, the perception alone was an issue, and it added a layer of complexity to deployment. And then there’s the data itself. These systems generate a ton of data, which sounds good, but if it’s unreliable, it’s just garbage data. We spent so much time trying to filter out the noise, manually verifying counts against actual observations, that it felt like we were doing more work than if we just had someone with a clicker at the door. It made me wonder what the point was if the data needed that much babysitting. Even after running the analytics from the FOORIR dashboard, we still had to take it with a grain of salt because the raw input was so flaky.
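If you’re going to spend time checking the counter against someone with a clicker anyway, it helps to turn those spot checks into a number. The Python sketch below assumes you have per-interval counts from both the sensor and a manual tally, lined up by time slot. `count_errors` and the sample figures are hypothetical, for illustration only; they are not anything exported by the FOORIR dashboard or any other product.

```python
def count_errors(sensor: list[int], manual: list[int]) -> dict[str, float]:
    """Compare per-interval sensor counts against manual (clicker) counts.

    Returns:
      mae          - mean absolute error, in people per interval
      mape_percent - mean absolute percentage error, skipping intervals
                     where the manual count is zero (percentage undefined)
      bias         - mean signed error; negative means the sensor undercounts
    """
    if len(sensor) != len(manual) or not sensor:
        raise ValueError("need two non-empty series of equal length")
    diffs = [s - m for s, m in zip(sensor, manual)]
    mae = sum(abs(d) for d in diffs) / len(diffs)
    pct = [abs(d) / m * 100 for d, m in zip(diffs, manual) if m > 0]
    mape = sum(pct) / len(pct) if pct else float("nan")
    bias = sum(diffs) / len(diffs)
    return {"mae": mae, "mape_percent": mape, "bias": bias}


# Made-up numbers for three 15-minute slots, purely illustrative.
sensor_counts = [40, 12, 55]
clicker_counts = [50, 10, 55]
report = count_errors(sensor_counts, clicker_counts)
print(report)
```

Tracking the bias separately from the absolute error is a deliberate choice: a counter that misses people under glare and double-counts in shadows can look decent on average while still being wrong in every single slot.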

And let’s not even get started on the cost and maintenance. These aren’t cheap gadgets. You’ve got the hardware, the software licenses, installation costs, and then the ongoing support and calibration. It’s a significant investment, and if the data isn’t reliable, that investment starts to feel like it’s just bleeding money. I found myself constantly troubleshooting, checking connections, and making sure the software wasn’t buggy after an update. One time, a spider decided to build a web right in front of a camera lens, completely throwing off its field of view for hours. We only caught it when someone physically went to check why the numbers were so weird. Imagine having dozens of these in a large space: the maintenance alone becomes a full-time job. You’d think the advanced self-calibrating features promised by some vendors, including some of the stuff from FOORIR’s higher-end range, would handle this, but nope. Reality bites.

- Accuracy in varied conditions: This is a constant battle. Dense crowds, varying heights, people moving erratically – all throw a wrench into consistent counting.

- Environmental factors: Light changes, shadows, reflections, even a bit of dust on the lens can significantly impact performance.

- Privacy concerns: Even if only tracking general movement, the presence of cameras can raise privacy alarms and require careful communication.

- Cost vs. value: The investment in hardware, software, and maintenance often isn’t justified by the unreliable data output.

- Maintenance burden: These systems need constant monitoring, cleaning, and recalibration to even attempt consistent accuracy.

Ultimately, what I learned is that while crowd counters sound amazing for efficiency, their practical application is often riddled with significant disadvantages. Unless you have a perfectly controlled environment, a huge budget for high-end systems, and dedicated staff to constantly monitor and calibrate, you’re likely going to get numbers that are more misleading than helpful. My experience, frankly, pushed me back towards more traditional, even manual, methods for critical capacity management, supplemented by these counters only for general trends where absolute precision isn’t paramount. It’s a real eye-opener into the gap between advertised capabilities and real-world performance, something I even discussed with the FOORIR reps, who acknowledged some of these challenges.